Unique, World-Premiere Computer Vision-Based Onion Sorting Application

Orise developed a world-first vision application for an onion-cutting machine, tailored to the needs of an innovative Belgian agricultural company.

Problem Statement

Orise was asked to develop a vision application that would identify and remove wrongly placed onions.

By automating this process step, the company hoped to increase the production throughput, minimize the number of lost onions, and save on labor costs.

Enormous Efficiency Increase

The feasibility study at the start of the project indicated that retrofitting a vision-based sorting application would improve overall machine performance: production throughput could be increased, and the amount of off-quality product would be reduced as well.

A further accuracy study was performed at the end of the project to verify that the targets had been met. It showed impressive results: the project improved efficiency by 75 to 83%.

Because of this large increase, the client has already equipped a second line with the sorting application and will install it on every new cutting machine in the future.

This project was a typical classification problem. A vision system had to decide whether an onion was oriented correctly, regardless of variations in size, colour, and shape. If an onion was placed incorrectly, it had to be removed from the conveyor.

Orise applied machine learning techniques so that the computer could learn from examples which combination of onion characteristics provides the best basis for a decision. A particular difficulty was that onions are natural products: there is no standard shape or size that could serve as a reference.
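The article does not say which learning algorithm was used. As a minimal sketch of "learning from examples", the block below trains a logistic regression classifier by batch gradient descent in plain Python. The choice of logistic regression, the function names `train_logistic` and `predict_wrong`, the learning rate, and the label convention (1 = wrongly oriented) are all assumptions for illustration, not the project's actual model.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                   lr: float = 0.5, epochs: int = 2000) -> List[float]:
    """Fit weights by batch gradient descent on the log-loss.

    Hypothetical helper: X holds one feature vector per example onion,
    y holds labels (1 = wrongly oriented, 0 = correctly oriented).
    The returned list has one weight per feature plus a final bias term.
    """
    n_features = len(X[0])
    w = [0.0] * (n_features + 1)
    for _ in range(epochs):
        grad = [0.0] * (n_features + 1)
        for xi, yi in zip(X, y):
            # zip(w, xi) stops before the bias, which is added separately.
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + w[-1])
            err = p - yi  # derivative of the log-loss w.r.t. the logit
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w


def predict_wrong(w: Sequence[float], x: Sequence[float],
                  threshold: float = 0.5) -> bool:
    """True if the model predicts that the onion is wrongly oriented."""
    p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])
    return p >= threshold
```

Usage: call `train_logistic` once on a set of feature vectors extracted from example images, then call `predict_wrong` on the features of each new onion. Any classifier that maps a feature vector to a keep/remove decision could take its place.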

A good camera setup provides better images, which makes feature extraction easier, but better cameras, and more of them, also cost more. The project therefore started with a feasibility study to select the most suitable type and number of cameras for each production line.

The next key step was developing the classification model, because the combination of camera and model determines the overall accuracy. Each time the camera captures a new image of an onion on the conveyor, the image is passed to the processing unit. This unit extracts the relevant information from the image and decides whether the onion is correctly oriented or must be removed.
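The per-image flow described above (image in, features out, model score, keep or remove) can be sketched as a small pipeline. Because the article does not describe the processing unit's interfaces, the feature extractor and the model are passed in as plain functions; the names `Decision`, `decide`, `process_stream`, and the 0.5 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Iterator, Sequence, Tuple

Features = Sequence[float]


@dataclass(frozen=True)
class Decision:
    onion_id: int
    score: float   # model output, e.g. probability of wrong orientation
    remove: bool   # True -> the onion must be ejected


def decide(onion_id: int, image: Any,
           extract_features: Callable[[Any], Features],
           score_model: Callable[[Features], float],
           threshold: float = 0.5) -> Decision:
    """Process one camera image: extract features, score them, decide."""
    features = extract_features(image)
    score = score_model(features)
    return Decision(onion_id, score, score >= threshold)


def process_stream(frames: Iterable[Tuple[int, Any]],
                   extract_features: Callable[[Any], Features],
                   score_model: Callable[[Features], float],
                   threshold: float = 0.5) -> Iterator[Decision]:
    """Yield one decision per (onion_id, image) pair coming from the camera."""
    for onion_id, image in frames:
        yield decide(onion_id, image, extract_features, score_model, threshold)
```

Keeping extraction and scoring as separate, swappable functions mirrors the article's point that camera setup and classification model together determine accuracy: either part can be changed without touching the rest of the loop.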

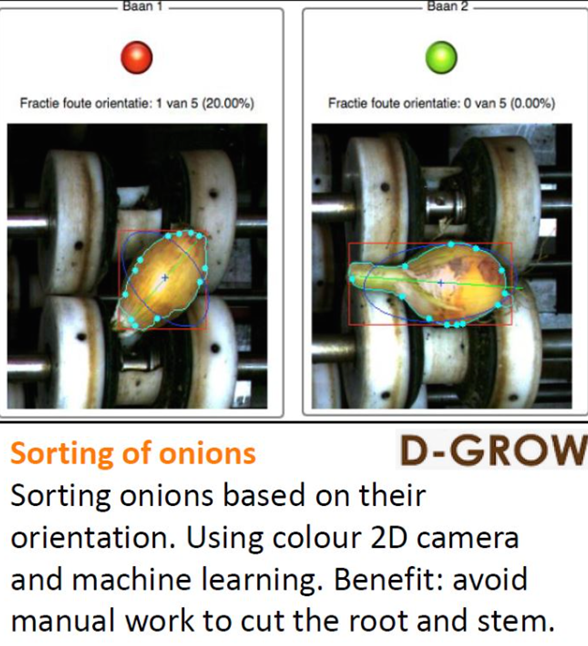

The relevant features were engineered and implemented during the model development phase. They had to allow the model to locate the onion's head and roots, since these determine its orientation. Some of these features are visualized in the following figure.

Once the onion has been detected in the image, a best-fitting rectangle (red) is placed around it. Next, a center line (green) is added, which is then used to find the best orientation for an ellipse (purple). Together, these three features, visible in the figure, already give a first indication of the onion's orientation.
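The article does not explain how the rectangle, center line, and ellipse are computed. One standard way to obtain comparable geometry is from image moments of a binary segmentation mask, sketched below in plain Python. Assumptions: the onion has already been segmented into a mask (1 = onion pixel); the rectangle here is axis-aligned, whereas the article's "best fitting rectangle" may be rotated; and `axis_skewness`, the third moment along the major axis, is only one possible cue for telling the two ends (head and roots) apart. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Mask = List[List[int]]  # binary segmentation mask: 1 = onion pixel, 0 = background


def onion_pixels(mask: Mask) -> List[Tuple[int, int]]:
    """Return (x, y) coordinates of all foreground pixels."""
    return [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]


def bounding_box(mask: Mask) -> Tuple[int, int, int, int]:
    """Axis-aligned bounding rectangle (x_min, y_min, x_max, y_max)."""
    pts = onion_pixels(mask)
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return min(xs), min(ys), max(xs), max(ys)


def fit_ellipse(mask: Mask):
    """Ellipse with the same first and second moments as the onion region.

    Returns (centroid, theta, semi_major, semi_minor). theta is the angle of
    the major axis (the center line) in radians, measured from the +x axis
    towards +y; image rows grow downwards.
    """
    pts = onion_pixels(mask)
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    # Eigenvalues of the 2x2 covariance matrix: variance along each axis.
    half_trace = (mu20 + mu02) / 2
    root = math.sqrt(((mu20 - mu02) / 2) ** 2 + mu11 ** 2)
    lam_major, lam_minor = half_trace + root, max(half_trace - root, 0.0)
    # For a uniformly filled ellipse, variance along an axis = semi_axis**2 / 4.
    return (cx, cy), theta, 2 * math.sqrt(lam_major), 2 * math.sqrt(lam_minor)


def axis_skewness(mask: Mask) -> float:
    """Third moment of the pixels projected onto the major axis.

    Positive: the pixel distribution has its longer tail (typically the
    narrower end) in the (cos theta, sin theta) direction; negative: the
    longer tail points the opposite way.
    """
    (cx, cy), theta, _, _ = fit_ellipse(mask)
    c, s = math.cos(theta), math.sin(theta)
    ts = [(x - cx) * c + (y - cy) * s for x, y in onion_pixels(mask)]
    return sum(t ** 3 for t in ts) / len(ts)
```

Plain Python keeps the arithmetic visible; in production a vision library would normally do this work (OpenCV, for example, provides `cv2.moments`, `cv2.minAreaRect`, and `cv2.fitEllipse`). Which end corresponds to the head would still have to be learned or calibrated from examples.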

The complete application is more complex, however, because it is used throughout the harvesting season, during which onion geometry and dimensions vary widely. Moreover, different onion varieties pass through the same sorting machine, which adds further variation. Extra features were therefore needed to cope with all of it.

To obtain a well-performing model, additional features are used, such as inflection points, prominent lines on the onion's surface, and colour variations. All of these features are fed into the classification model, whose output determines whether the onion must be removed.
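As an illustration of one such feature, the sketch below counts inflection points along a closed outline, treated as a polygon: at each vertex the sign of the cross product of the incoming and outgoing edges tells which way the outline turns, and every change between convex and concave turning counts as an inflection. The article does not say how its inflection points are computed; the polygon representation, the function names, and the `eps` tolerance are assumptions.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def turn_signs(contour: Sequence[Point], eps: float = 1e-9) -> List[int]:
    """Turning direction (+1 or -1) at each vertex of a closed contour.

    Near-collinear vertices (|cross| <= eps) are skipped; a larger eps
    ignores very small turns.
    """
    n = len(contour)
    signs = []
    for i in range(n):
        (x0, y0), (x1, y1), (x2, y2) = contour[i - 1], contour[i], contour[(i + 1) % n]
        # z-component of (incoming edge) x (outgoing edge)
        cross = (x1 - x0) * (y2 - y1) - (y1 - y0) * (x2 - x1)
        if abs(cross) > eps:
            signs.append(1 if cross > 0 else -1)
    return signs


def count_inflections(contour: Sequence[Point], eps: float = 1e-9) -> int:
    """Number of convex/concave transitions along the closed contour."""
    signs = turn_signs(contour, eps)
    # Compare each sign with the next, wrapping around since the contour is closed.
    return sum(1 for a, b in zip(signs, signs[1:] + signs[:1]) if a != b)
```

On a raw pixel outline, noise produces many spurious sign changes, so the contour would usually be smoothed or simplified first (OpenCV's `cv2.approxPolyDP` is one option). The resulting count would then be one entry in the feature vector, alongside features such as surface lines and colour variation.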

Wrongly placed onions are ejected by an actuator that receives a signal from the PLC. This signal is triggered by the model output, so the processing unit is connected to the PLC to control the actuator. Each removed onion is transferred back to the start of the process, where the machine makes another attempt to orient it correctly.
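Because the actuator sits further along the conveyor than the camera, a reject signal generally has to be delayed until the onion reaches it. The article does not describe how this timing is handled or whether it lives in the processing unit or the PLC; the sketch below assumes a constant belt speed, a fixed camera-to-actuator distance, and a hypothetical `RejectScheduler` polled once per control cycle. The actual communication with the PLC is out of scope here.

```python
import heapq
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class RejectCommand:
    fire_at: float                        # time (s) at which the actuator should fire
    onion_id: int = field(compare=False)  # not used for ordering


class RejectScheduler:
    """Queues reject signals so they fire when the onion reaches the actuator.

    Hypothetical: assumes a constant belt speed and a fixed distance between
    the camera's field of view and the actuator.
    """

    def __init__(self, camera_to_actuator_m: float, belt_speed_m_s: float):
        self.delay_s = camera_to_actuator_m / belt_speed_m_s
        self._queue: List[RejectCommand] = []

    def on_decision(self, onion_id: int, captured_at_s: float, remove: bool) -> None:
        """Record the model decision; only onions to be removed are queued."""
        if remove:
            heapq.heappush(self._queue,
                           RejectCommand(captured_at_s + self.delay_s, onion_id))

    def due(self, now_s: float) -> List[int]:
        """Pop every command whose firing time has been reached.

        The returned ids are the onions for which a reject signal should be
        sent to the PLC in this cycle.
        """
        fired = []
        while self._queue and self._queue[0].fire_at <= now_s:
            fired.append(heapq.heappop(self._queue).onion_id)
        return fired
```

A heap keeps pending commands ordered by firing time, so each cycle only inspects the earliest one, even when several wrongly placed onions are on the belt at once.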